January 2025 BenGoldhaber.com Newsletter

building idea machines and sweating it all out this month

Life update! I’m excited to share that I’m joining the Future of Life Foundation.

FLF is hard to put in a box - part grant-maker, part strategic research group, part VC firm. But the through-line is figuring out how humanity can preserve control and agency as AI becomes more capable. Our strategy is to support and incubate new projects, primarily though not exclusively those that can harness advances in AI to improve epistemics and coordination, or to build other differentially beneficial technologies.

What does that actually mean? Well, here’s a toy model: for most of human history, mankind has called the shots. People have made the important decisions. Often it has been a limited group of people, but at the end of the day, people.

Not too long from now - and maybe the moment has passed already - humans and AI will be making the calls. This morning I used Gemini to write code to transcribe a series of recordings. Then I asked Claude to listen to my ramblings about a decision and model the underlying worldview I was advocating. It did a pretty good job.

Are these just tools? Are they more? :shrug_emoji: Identifying the Rubicon between tool and agent, and when it’s crossed, doesn’t seem that important to me. I’m a practical man - ignoring the fact I like to work at places with names like the Future of Life - and it’s self-evidently true that most of the consequential decisions between now and some number of years from now will be made in collaboration with AI.

On some level this is very exciting. Having 2024-level machine intelligence at my fingertips is empowering. Literally *right at this moment* anything you can describe can become a song, a poem, an image.

Unfortunately this doesn’t seem like a stable equilibrium - by default I don’t expect us to stay in this “human AI partnership” world for long. There are serious limitations we as a species face - we don’t think at the speed of silicon, we can’t make infinite copies of ourselves, we don’t recursively self-improve - that will push towards AI domination and human disempowerment.

… I’m less excited for that. I’d like us to collectively take our time, in this new era we’re entering, to really make sure that we’re building safe AI we can control which incorporates the values we care about, and use the enhanced science and technology we’ll have to create systems that won’t leave 99%-100% of humanity behind.

I’m optimistic we can do it. Technological determinism and naive accelerationism are bullshit - we can decide to build good things and not build bad things. Totally possible.

But in order to collectively do it, we’ll need new systems that enable us to coordinate and reason about how to do the good things and not the bad things in a fast-moving, chaotic time. We’ll need forecasting and planning systems that groups can use to coordinate action, trustworthy evaluations of whether AI systems are acting in their end users’ interests, advances in multilateral bargaining and credible commitments, stronger verifiable security guarantees. Etc.

FLF is interested in spurring the creation of projects and ecosystems of projects that can help here, and in other areas that will be unlocked by AI advances. I think we can create the search function + roadmaps + initial funding to find great ideas and great founders, which I don’t expect to come by default from the market or from existing institutions.

There are a number of things working in our favor:

People are seeing the world we’re moving towards, and after that initial disorienting feeling, they often want to find ways to help. So there’s amazing talent that wants to tackle these problems.

Advances in AI are speeding up the ability to build and try new things. Making good plans and having clear, de-confused concepts can happen now, with more of the implementation done in the near future, when automated engineering will be even more powerful.

Things in this area - new recommender systems, forecasting, accurate credit assignment, tools for thought, etc. - have been tried before and, in my opinion, largely failed, but I expect that with an increase in automated decision making these ideas will see more adoption.

I’ll be building our portfolio of projects/people and helping founding teams move from idea to project to impact. I expect this to involve a lot of partnerships, fast-moving experiments, and talking to weird people with crazy ideas. In general this is the mode of operation that resonates deeply with my soul.

I expect to have more to share over the coming months; for now I’m wrapping up my time at FAR.AI - and I’ve appreciated all we’ve done together to build a good research community in FAR.Labs - and getting oriented to FLF.

This will be a dangerous path, requiring for success a realignment of our institutions toward differential technology development, an ability to provide not only market-supplied safety, but a major expansion in our ability to provide non-market safety. That's what it means to solve the Alignment Problem in the posthuman world. - Michael Nielsen

I hope FLF will help us collectively and successfully walk this path!

Related Reading:

My current personal obsession is the Banya, aka Russian steam baths. Going for a long shvitz is how I honor my ancestors: old Jewish men who liked to sit for long periods. It also seems to have subtle positive mental effects, to the point where I can justify spending several hours on a Sunday evening because of the knock-on benefits for my sleep and productivity in the first half of the week.

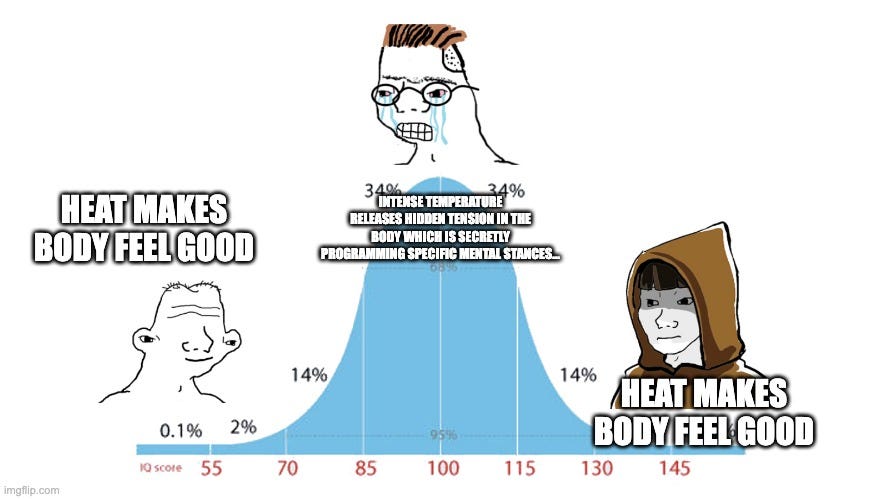

The rationalist in me has to have a model for why I think I think better after a sauna sess, and the ‘vasocomputation’ theory making inroads on Woo twitter is intriguing.

We’re sort of fish-in-water in that we all have a default stance, i.e. a pattern of tension we habitually hold to feel like ourselves. Some of this tension is likely latched, and some isn’t; unlatched tension can be relaxed on the order of seconds-to-minutes if we find the right prompt, whereas latched tension will be constant across all situations until it’s explicitly released. What Buddhism calls the “self” will be a combination of latched tension (vascular muscle that is physically glued into a contracted state) and tension that is merely habitually clenched (i.e. *not* physically latched)

I’d bet against this particular theory - largely on priors that any given theory like this is wrong - but I’m enjoying the search for why my brain is better on steam.

An alternative theory:

PersonalityMap - One million+ human correlations which you can search through with natural language. It’s ready to support your arbitrary ad-hoc personality quiz.

What really happens inside a dating app: Lots of data and qualitative takes on the dating app marketplace:

The other thing that interests you is the like ratio, or the openness, among 100 profiles that the user sees, how many of them does he like? (The median for men is 26% and for women is 4%.)

The like ratio of a girl is almost independent of the profiles she sees. For example, if a girl has a like ratio of 5% and you remove 50% of the profiles, even if you remove only the profiles she will not like, her like ratio will still be 5%

The feed algorithm is the only thing that will impact the retention of users on your app. Everything else almost doesn't matter.

At the end the predictor of two people meeting with each other is not a match or that the conversation started well. It is just the fact that the two really wanted to meet each other.

Profile pictures: This is the only thing that matters for a guy on a dating app, we had guys going to 0.03 ratio to 0.2 ratio just by changing their picture.
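The like-ratio claim is easier to appreciate with a toy calculation. The sketch below assumes a hypothetical "fixed bar" model, where a user likes every profile whose score clears some fixed threshold; the scores and the threshold are made up for illustration and are not from the article.

```python
# Toy model (an assumption, not from the article): each profile has a
# hypothetical score from 0 to 99, and the user likes every profile at or
# above a fixed bar.
THRESHOLD = 95


def like_ratio(shown: list[int]) -> float:
    """Share of shown profiles the user likes under the fixed-bar model."""
    likes = sum(score >= THRESHOLD for score in shown)
    return likes / len(shown)


all_profiles = list(range(100))  # 100 profiles with scores 0..99
# Remove 50 profiles she would not have liked anyway (scores 0..49).
filtered = all_profiles[50:]

print(like_ratio(all_profiles))  # 0.05: 5 likes out of 100
print(like_ratio(filtered))      # 0.1: the same 5 likes out of 50
```

Under a fixed bar, filtering out profiles she would have passed on doubles the like ratio from 5% to 10%. The article reports it stays at 5%, which suggests selectivity tracks the pool being shown rather than a fixed bar.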

Interesting throughout. I feel like dating apps and their mechanics get a lot of attention - dating is fun to talk about! - but maybe they deserve even more attention. How couples match and form is important to society, and the systems we’ve built uh might not be optimal. Related: System 2 Recommenders.

Last Boys at the Beginning of History: It seems undeniable that there is more intellectual energy and vigor within MAGA than in other parts of political life. This was an incredible profile of that movement.

These young intellectuals call themselves—like pitch-perfect nineteenth-century Romantics—“sensitive young men.” At the after-parties they discuss metaphysics. Despite this being a D.C. social event, I don’t know where they work. It’s obvious, however, that some of the best congressional offices on the Hill, several conservative magazines and the D.C.-area universities are well represented…

At the last after-party in mid-December, I meet a distinctly new kind of conservative. “I’ve done ayahuasca eleven times,” he tells me. He is close to thirty, I think, and has been introduced to me as a great scholar of the Bronze Age, or so I hear with excitement—it turns out he’s an expert on Bronze Age Pervert. “Each time I’ve gone to the ritual in the Amazon, I’ve brought Thus Spoke Zarathustra there and read it.”

“During?”

“No, not during,” he says, as though I ought to know how one typically reads Nietzsche at an Amazonian ayahuasca ritual. “Before and after. Chapters at a time.”

“The last time I did, it”—the ayahuasca, he means—“told me to never return. And I haven’t since.” He pauses and looks thoughtfully away from me; he sighs. “I came away from it certain that modernity must be destroyed.”

Always Start with a Model: Good general advice from Byrne on being explicit about your model when making decisions:

You can think of a thesis as a way to ensoul assorted facts and start making them dance in the right direction. Even if the thesis is wrong, acting on it means testing it, and that's the only way to get it right.

I appreciate the simplicity of the AutoFocus system of todo management:

The system consists of one long list of everything that you have to do, written in a ruled notebook (25-35 lines to a page ideal). As you think of new items, add them to the end of the list. You work through the list one page at a time in the following manner:

Read quickly through all the items on the page without taking action on any of them.

Go through the page more slowly looking at the items in order until one stands out for you.

Work on that item for as long as you feel like doing so.

[A few more steps but that’s basically the gist]
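The page-by-page procedure is simple enough to sketch in code. This is a minimal illustration covering only the steps quoted above, not the complete system: the `stands_out` callback is a hypothetical stand-in for the human judgment of an item "standing out", and the page size of 30 is just one pick from the suggested 25-35 lines.

```python
from typing import Callable, Optional

# Assumed page size: the source suggests 25-35 lines per notebook page.
PAGE_SIZE = 30


class AutoFocusList:
    """One long to-do list, read one fixed-size 'page' at a time."""

    def __init__(self, page_size: int = PAGE_SIZE) -> None:
        self.page_size = page_size
        self.items: list[str] = []
        self.done: set[int] = set()  # indices of crossed-off items

    def add(self, task: str) -> None:
        # New items always go at the end of the list.
        self.items.append(task)

    def page(self, n: int) -> list[tuple[int, str]]:
        """Return the open (index, task) pairs on page n (0-based)."""
        start = n * self.page_size
        end = start + self.page_size
        return [
            (i, task)
            for i, task in enumerate(self.items[start:end], start)
            if i not in self.done
        ]

    def pick(
        self, n: int, stands_out: Callable[[str, list[str]], bool]
    ) -> Optional[int]:
        """Return the index of the first item on page n that stands out, if any."""
        open_items = self.page(n)
        # Pass 1: skim every open item on the page without acting on any.
        skim = [task for _, task in open_items]
        # Pass 2: go through slowly, in order, until one item stands out.
        # `stands_out` is a hypothetical hook for a human judgment call.
        for i, task in open_items:
            if stands_out(task, skim):
                return i
        return None

    def cross_off(self, i: int) -> None:
        # Mark an item as done. What happens to unfinished items is among the
        # steps the summary skips, so it is not modeled here.
        self.done.add(i)


tasks = AutoFocusList(page_size=3)
for t in ["email Sam", "book banya", "write newsletter", "taxes"]:
    tasks.add(t)
choice = tasks.pick(0, lambda task, page: "newsletter" in task)
print(choice, tasks.items[choice])  # 2 write newsletter
tasks.cross_off(choice)
```

Passing the "stands out" judgment in as a function keeps the mechanics (one list, fixed pages, in-order scanning) separate from the part that is meant to stay intuitive.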

Note: there were too many AI updates this month for me to choose from; if you’re trying to pursue the fool’s quest of keeping up with all this, I continue to recommend Zvi’s blog as a good first stop.

#good-content:

The Hateful Eight: Rewatched the Quentin Tarantino film about the post-Civil War West and loved it even more than the first time. For a violent, dark movie, there’s an inherent relaxing quality to it. It takes its time: no quick cuts, long drawn-out shots, lots of character work.

The Gentlemen: A TV series created by Guy Ritchie, it has that action-comedy flair he’s so good at, along with his tried-and-true English gangster plotlines. I’m not sure it counts as ‘quality’ TV, but it’s fun and compelling, and by the end I didn’t even need subtitles.

xoxo,

Ben