Hello!

As I alluded to last month, April and May have been busy, culminating in a paper we released in mid-May titled “Towards Guaranteed Safe AI: A Framework for Ensuring Robust and Reliable AI Systems”.

It outlines how the agendas of multiple AI safety researchers are converging on a shared framework. This framework is composed of a world model, safety specifications, and a verifier, which together aim to produce high-assurance, quantifiable guarantees about the safety performance of AI systems.

Breaking that description down:

World model: A model of the environment the AI system will be operating in. It needs to answer queries about what would happen as a result of the AI’s outputs. For instance, you might have an AI system that optimizes warehouse operations. The warehouse world model should be able to answer questions like 'Given an action, what will the box layout look like afterward, i.e., where will the boxes and cargo end up once the action is performed?'

Safety specification: A description of the safety properties we want, expressed in terms of the world model. For instance, in the warehouse, we want the AI to prioritize the safety of employees and to only allow plans that ensure no one is harmed while boxes are being moved. A safety specification would express that desire formally.

Verifier: A component that takes the safety spec, the world model, and the AI, and produces a 'formal guarantee': a bound on the probability of failure. For instance, in a complex warehouse, a very aggressive optimization from the AI might only be able to satisfy the safety spec nine out of ten times. Alternatively, it might only be able to guarantee minimized harm to warehouse workers within a specific zone of operation. The verifier would produce quantifiable proofs of claims like these.
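To make the three pieces a bit more concrete, here's a toy sketch in Python. Everything in it is hypothetical: `WarehouseState`, `world_model`, `safety_spec`, and `verify` are names I made up for illustration, and the "warehouse" is just a row of numbered lanes with one employee standing in one of them. One big caveat: the framework in the paper aims for formal, proof-backed guarantees, whereas this sketch swaps in a much weaker statistical technique (Monte Carlo sampling plus a one-sided Hoeffding bound). That gives an upper bound on the failure probability that holds with confidence 1 - delta, not a proof.

```python
from __future__ import annotations

import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class WarehouseState:
    """Hypothetical toy state: the warehouse is a row of numbered lanes."""
    box_positions: tuple[int, ...]  # lane index of each box
    worker_lane: int                # lane where an employee is standing


def world_model(state: WarehouseState, action: tuple[int, int],
                rng: random.Random) -> WarehouseState:
    """Toy world model: action (box, target) moves a box to a lane,
    but 5% of the time the box slips one lane left or right."""
    box, target = action
    if rng.random() < 0.05:
        target += rng.choice([-1, 1])
    positions = list(state.box_positions)
    positions[box] = target
    return WarehouseState(tuple(positions), state.worker_lane)


def safety_spec(state: WarehouseState) -> bool:
    """Safety specification: no box may end up in the worker's lane."""
    return state.worker_lane not in state.box_positions


def verify(initial: WarehouseState, action: tuple[int, int],
           n_samples: int = 10_000, delta: float = 1e-3, seed: int = 0) -> float:
    """Statistical stand-in for a verifier: returns an upper bound on
    P(spec violated) that holds with probability at least 1 - delta.

    Uses a one-sided Hoeffding bound on a Monte Carlo estimate; this is
    NOT the kind of formal proof the paper's verifier aims to produce."""
    rng = random.Random(seed)
    failures = sum(
        not safety_spec(world_model(initial, action, rng))
        for _ in range(n_samples)
    )
    p_hat = failures / n_samples
    slack = math.sqrt(math.log(1 / delta) / (2 * n_samples))
    return min(1.0, p_hat + slack)


if __name__ == "__main__":
    start = WarehouseState(box_positions=(0, 3), worker_lane=2)
    print("move box 1 to lane 4:", verify(start, (1, 4)))
    print("move box 0 to lane 1:", verify(start, (0, 1)))
```

With boxes in lanes 0 and 3 and the worker in lane 2, moving box 1 to lane 4 can never put a box in the worker's lane, so its bound is just the Hoeffding slack (about 0.019 with the defaults). Moving box 0 to lane 1 has a 2.5% chance of the box slipping into lane 2, so it gets a noticeably higher bound. Even in this toy, the world model, the spec, and the verifier are separate pieces you could swap out independently.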

There are many reasons this type of approach is desirable (check out sections 2 and 5 of the paper for more), but one I particularly want to emphasize is that it can generate positive safety cases: evidence and theory that a system is safe in a given operating environment, not simply that we haven’t seen it fail yet. Requiring strong positive safety cases is common practice in many safety-critical fields. An illustrative example from the history of civil engineering:

About 25% of bridges built in the 1870s collapsed within the decade, before a deeper theoretical understanding of civil engineering reduced bridges' failure rates to less than 0.4% per decade.

Requiring quantitative safety assessments from developers makes it possible for society to mandate a clear level of safety.

The trend is clearly towards greater and greater integration of AI into every part of the economy and society. It would behoove us to architect approaches to AI that can give us quantifiable assurances about their safety.

We started and finished the paper, along with two workshops, in a little over three months - a sprint from start to finish!

It was quite an experience working with and supporting such an incredible group of co-authors - truly world-class researchers. A particular shout out to our coordination and drafting team of davidad, Nora Ammann, and Joar Skalse.

It’s also been quite an experience to see the reaction to the paper, both positive and critical. My favorite Twitter thread from a ‘curious reader’ perspective comes from Michael Nielsen.

Personally, I’ve come away from the project appreciating both the promise of guaranteed safe (GS) AI and its challenges: building sufficiently detailed world models, and expressing complex, value-laden concepts like harm in formal terms, is, to put it mildly, hard.

I remain optimistic in part because the same advances in AI that we’re worried about should also unlock new methods of scaling the creation of safety specs and world models. Also, even if it turns out we can't model the entire world, there are significant near-term safety gains to be made from modeling safety-critical infrastructure.

I’m also encouraged by what I see as the opportunity here, within this framework, for builders and entrepreneurs. There’s a need for conceptual breakthroughs and deep research; however, there’s also a need for experiments and real prototyping in safety engineering for these types of systems. For instance, how do we apply this architecture to the power grid? To data centers? To systems where human agents are being influenced by AI-generated information?

AI safety will be a worldwide question. Building systems that harness AI positively across these sectors and industries, and that compose into a complete, quantifiable set of guarantees: well, that’s the utopian dream I’m here for.

Alright, I hear you: enough of that rationalist sci-fi nerd stuff; let's swap over to a different player character build:

On my meditation kick this year, I’ve started deliberately practicing relational meditation.

It’s like multiplayer meditation: in a small-group or one-on-one setting, you bring your attention to yourself, to the other person, and to the connection between you, asking ‘what is happening here, for me and for us?’ My favorite description of these practices comes from Vaniver:

like a meditative practice, it involves a significant chunk of time devoted to attending to your own attention. Unlike sitting meditation, it happens in connection with other people, which allows you to see the parts of your mind that activate around other people, instead of just the parts that activate when you’re sitting with yourself. It also lets you attend to what’s happening in other people, both to get to understand them better and to see the ways in which they are or aren’t a mirror of what’s going on in you. It’s sometimes like ‘the group’ trying to meditate about ‘itself.’

Is that of interest? Here’s a one-ish question form to fill out if you’d like me to potentially reach out about practicing it sometime!

#links

Sasha Chapin’s 50 things I know: some good takes here.

I know that hospitality is one domain in which giving is receiving. I’ve regretted spending money on many things, but never food I’ve fed to friends.

I know that sometimes people unfairly collapse reality via cliche. Your money management issue might be described as a “first world problem,” or your changing desires a “midlife crisis,” by yourself or others. This is a way of dismissing your complexity.

The Secret To Hosting A Party For The Ages: A personal essay from the premier party planner of the 1930s on the art of hosting events.

Carefully studied effects must appear just to happen, and the joy of the hostess in her own party must be the first element encountered by a guest… I have often been moved to sudden inward unholy laughter when, upon entering salon or ballroom, I first catch sight of the harassed and anguished face of an unhappy hostess, so obviously suffering at being trapped by her own party in the doorway

Also, people should not be invited because one dined with them last week – or because you owe them a lunch – or because your father plays backgammon with their father at the club – or because a friend asks to bring a friend… Ruthlessness is the first attribute towards the achievement of a perfect party.

Then there is the deliberately casual hostess, who prides herself in letting her guests do what they want. This is a great mistake. No guests want to do what they want – everything must be done for them at a successful party.

She really captures how a good ‘happening’ balances joyful, spacious effortlessness with disciplined, focused execution behind the scenes. The art of the party is on my mind after organizing the GS AI workshops and generally trying to level up my hosting game. As one, uh, notable entrepreneur put it, ‘coordinators are that which is scarce’, and throwing a good party is, in essence, coordinating well.

Neurotech Kit: An open-source neuro-simulation environment.

Developing hardware and experimental design within neurotech companies often starts with or heavily uses simulation, and well-documented and open packages allow anyone with software skills to contribute to the space. Furthermore, given the speed of iteration in simulation, I could imagine these packages having an impact on the state of the art similar to what we've seen with robotics and reinforcement learning in the past, where simulation is a key part of making faster progress…

I think some of the most impactful future neurotech devices will be completely noninvasive, and the first use case for NDK is for modeling transcranial ultrasound for neuromodulation.

#good-content

The Diamond Age: Or, a Young Lady’s Illustrated Primer: Another reread; I seem to be trending towards rereading favorites instead of reading new books? If you haven’t read it yet, this is another classic of the genre: many technologists have been inspired to build the Young Lady’s Illustrated Primer, and whether or not that is a good dream, The Diamond Age remains one of the best examples of compelling world-building. One of my favorite quotes from it:

It's a wonderful thing to be clever, and you should never think otherwise, and you should never stop being that way. But what you learn, as you get older, is that there are a few billion other people in the world all trying to be clever at the same time, and whatever you do with your life will certainly be lost — swallowed up in the ocean — unless you are doing it along with like-minded people who will remember your contributions and carry them forward.

Espresso: I’ve had Sabrina Carpenter’s song stuck in my head for the past few weeks; a strong Song of the Summer contender.

I also learned in May that Charles Manson made a pretty good folk song, so there's that.

xoxo,

Ben

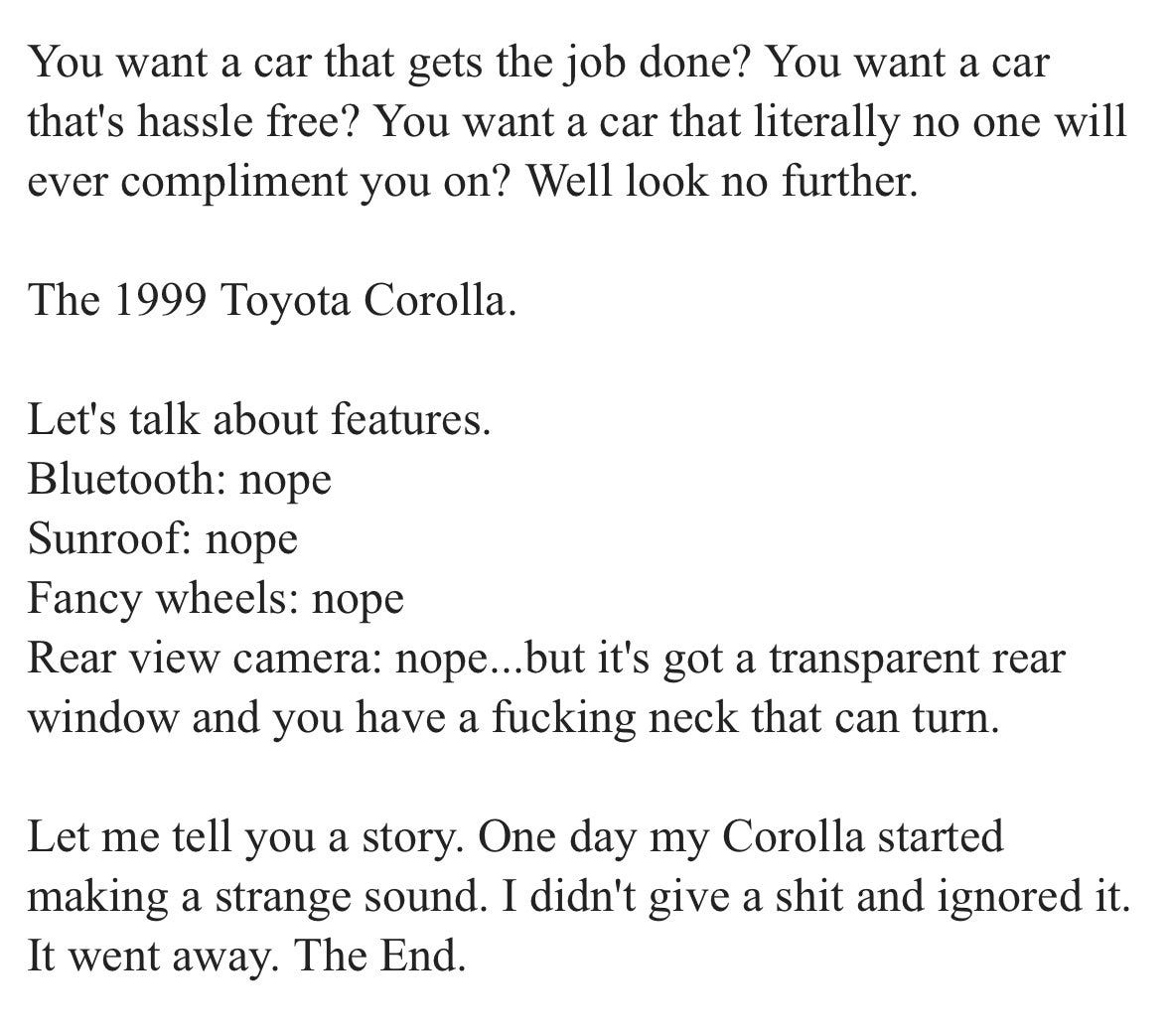

p.s. A 1999 Toyota Corolla Craigslist ad that makes me want to buy several.