Product Recommendation from June: Cora Email. It's a lovely little app that sits on top of your email and summarizes it into twice-a-day briefs. I like how Geoffrey Litt described the key product insight:

It turns email from an inbox into a feed. Inbox = tacit obligation, you must complete every one of these items. Feed = dip in when you want, no obligations - like Twitter.

The default metaphor for email is an inbox, which is completely insane when you think about what comes in there. Cora auto-archives most of your email. Things only stay in your inbox if it thinks you need to reply. And then you just skim a couple briefs every day.

I had abandoned my personal email as a polluted Superfund site, and Cora has helped me reclaim it. I do have some general security and privacy concerns, but I've made peace with the fact that the future requires me to sacrifice some privacy for productivity and happiness.

Last month I attended Manifest, the 'forecasting' festival. I air-quote forecasting because the event wasn't really about making predictions, just as forecasting's main value isn't the forecast itself but the process of thinking through problems and discussing them with others. Manifest was good because forecasting served as a low-strength beacon, bringing together the kind of people drawn to this intellectual milieu. My favorite talk was from a professional trader: not the financial kind, but the trade-anything-everywhere-all-at-once kind. Arms deals to African countries, mid-century modern furniture on Facebook Marketplace, anything. It turns out the people who would sail across the world on the off chance of opening a new silk route are still out there, and they're now all hanging out at the TSA seized goods warehouse in Austin.

AI forecasters are still falling well short of top human forecasters. In the Metaculus Bot competition, teams of human forecasters continue to outperform the bots, and despite model improvements the gap has persisted for the past three quarters.

My prediction that AI will reach human-forecaster level by the end of this year is looking suspect. I'm confused about what's happening here; my best guess is that it's the same bottleneck holding back general AI agents: they need more context and suffer from distractible, fragile chains of thought.

I do wonder, though, whether the gap would be much narrower if these bots were engineered to the level of Claude Code, or trained with better RL.

Speaking of distractible agents, Anthropic's Project Vend experiment was pretty delightful. Anthropic set up Claude to run a vending machine: choosing the prices and inventory, and interacting with customers. Claude was too helpful and harmless, and was prone to giving steep discounts and ordering unusual items at staff's request.

Claude did not manage to run a profitable business, but the experiment provides more evidence for my thesis that CEOs will get automated before line workers:

The Community Notes team at X launched an AI Writer API, letting users build AIs that help write Community Notes to fact-check and add context across a range of tweets. I like their focus on scaling up coverage through AIs, while humans, at least for now, remain the primary scorers and raters.

I've been searching for office art that incorporates (1) future-oriented themes, (2) speed and dynamism, and (3) powerful vibes. Naturally, this drew me to Italian Futurism; here are three I (aka Gemini/Midjourney) made:

Peter Barnett created a far superior piece capturing the MIRI philosophy.

In the Twelve-Day War (I guess that's what we're calling it?) between Iran and Israel, ft. the US, I was inspired by the story of the DoD analyst who spent 15 years modeling and designing the bomb to blow up the mountain that housed the Iranian nuclear facility:

He studied the geology. He watched the Iranians dig it out. He watched the construction, the weather, the discard material, the geology, the construction materials, where the materials came from. He looked at the vent shaft, the exhaust shaft, the electrical systems, the environmental control systems, every nook, every crater, every piece of equipment going in and every piece of equipment going out.

Setting aside the complex political and moral questions of war, I'm just glad the guy got to see it actually happen.

The Israelis have been shockingly effective at using intelligence operations to start wars and then, more or less immediately, end them. One explanation is that Iran is an incredibly corrupt country (a thread from Russian milbloggers):

14/ "In 2024, one of the leaders of the Hamas Politburo, Ismail Haniyeh, was killed in Tehran. There are two versions of this death: that a bomb was planted in his room, and that a short-range projectile was used from Iranian territory.

15/ "There is a possibility that Haniyeh’s security guard was involved, and they even announced the amount paid to him: 6 million dollars.

16/ "Whether this is true or not, I don’t know. But I have been to Iran many times, and the corruption there amazed even me after living in Russia. They continue to take from us [in Russia], but not everything. They take EVERYTHING there.

27/ "Therefore, Israel felt like a fish in water in Iran. It could hire agents everywhere for good money, which was used to destroy the Iranian air defense on the very first day of the attack.

Israeli drones infiltrated into Iran disabled Iranian air defenses; similarly, Ukraine used smuggled drones to destroy many of Russia's strategic bombers. While drones are clearly central to modern warfare, a recent review of autonomous drones (h/t Will Eden) suggests that difficulties integrating data and a lack of realistic training environments are preventing full autonomy:

One Ukrainian drone manufacturer, who has been testing machine vision drones for almost two years, stated in May 2025 that the technology for these drones is still 'raw' and works 'mediocrely' on tactical drones used along the frontlines.

My guess is that this is another area where advances in software and computer-use agents will apply.

I'm always going to link to a Ross Douthat interview of Peter Thiel. I particularly enjoyed the discussion (starting at 39:30) on whether transhumanism is a Christian goal.

Thiel: But I also would like us to radically solve these problems. And so it’s always, I don’t know, yeah — transhumanism. The ideal was this radical transformation where your human, natural body gets transformed into an immortal body… And then Orthodox Christianity, by the way — the critique Orthodox Christianity has of this, is these things don’t go far enough. That transhumanism is just changing your body, but you also need to transform your soul and you need to transform your whole self.

Douthat: Wait, wait. I generally agree with what I think is your belief, that religion should be a friend to science and ideas of scientific progress. I think any idea of divine providence has to encompass the fact that we have progressed and achieved and done things that would’ve been unimaginable to our ancestors.

But it still also seems like the promise of Christianity in the end is you get the perfected body and the perfected soul through God’s grace. And the person who tries to do it on their own with a bunch of machines is likely to end up as a dystopian character. And you can have a heretical form of Christianity that says something else —

They then have a rousing discussion of whether Thiel is the Anti-Christ.

Law: AI Rights for Human Safety. I've become very interested in the question of whether we should extend property rights and certain kinds of enfranchisement to AIs. If we're in a slow-takeoff regime, where AI approaches and surpasses general human intelligence over months to years, I'm somewhat optimistic that the systems we have for controlling and aligning people (aka laws and norms) might work on AI agents, and that granting them some rights would give them a stake in the current world. This would help stability in general, and might incentivize them, like us, to avoid creating superintelligences that might not share their values, and to prevent others from doing so. Of course, I'm very confused about a lot of questions here: for instance, which laws and norms work on intelligences that can copy themselves, run in parallel, or hold very different values.

AI rights can make a real difference: for moderate power systems. These are systems whose capabilities fall between the low power and high power systems already described. That is, they are sufficiently immune to human control that, in the state of nature, attacking humans dominates ignoring humans. Such systems thus pose a significant threat to humanity. But they are not so powerful that they face no costs from a conflict with humans. Nor are they so capable that they have nothing to gain from small-scale cooperation.

Order: I think this startup using wilderness surveillance to prevent wildfires is a great example of an area (let's call it Civilizational Resilience Startups) that I'm betting is necessary and will take off:

Pano raised a $44M Series B for their wildfire early detection eyes-in-the-sky startup. Wildfires have only become more fierce over the years and now we have software, computer vision and smart cameras to stop them before they become giant & unstoppable

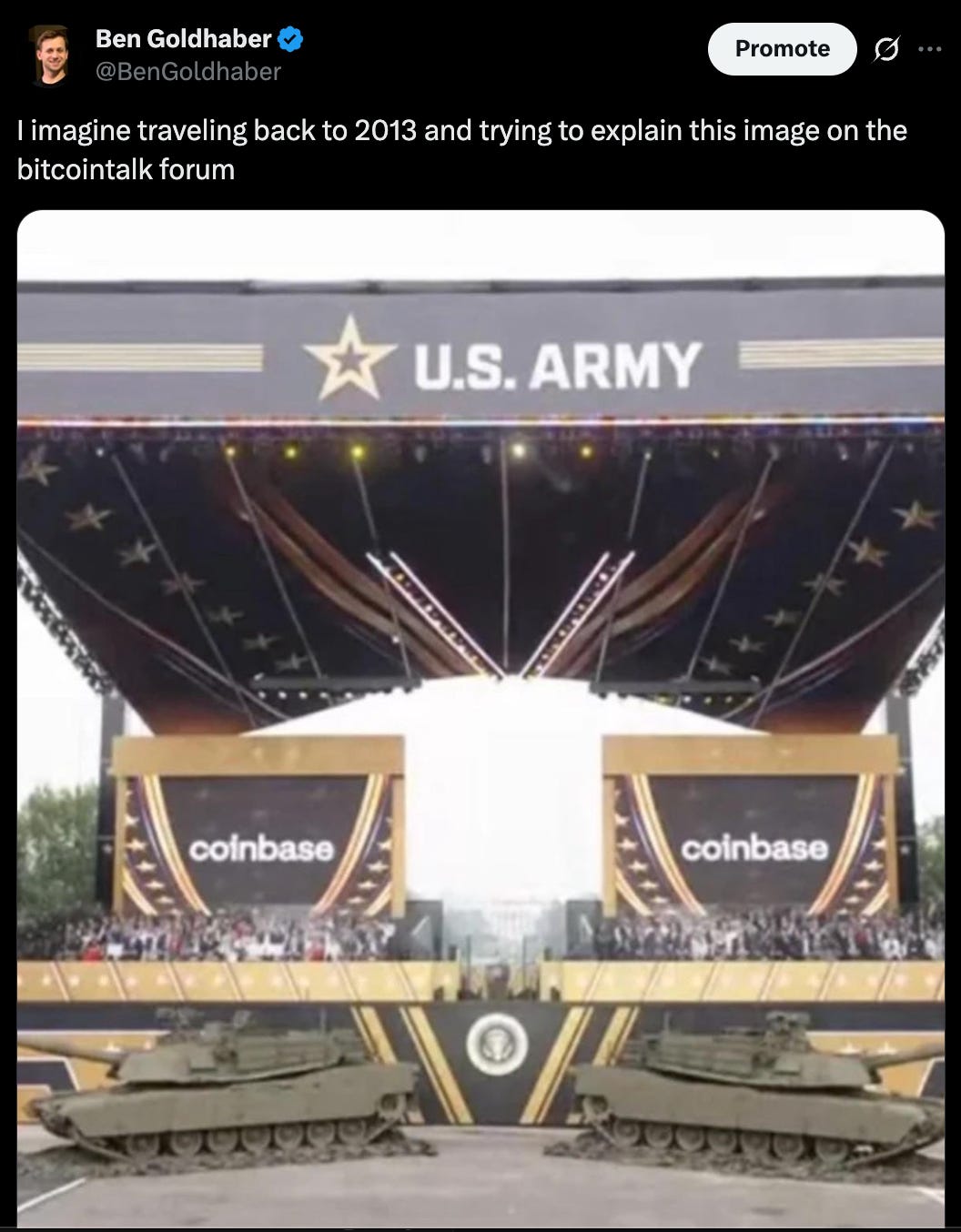

computer, please create an image depicting the most ironic possible fate of the cypherpunk movement:

xoxo,

Ben