Most people's life satisfaction matches their personality traits:

The Big Five domains and nuances allowed predicting life satisfaction with accuracies up to r_true ≈ .80–.90 in independent (sub)samples. Emotional stability, extraversion, and conscientiousness correlated r_true ≈ .30–.50 with LS, while its correlations with openness and agreeableness were small. At the nuances level, low LS was most strongly associated with feeling misunderstood, unexcited, indecisive, envious, bored, used, unable, and unrewarded (r_true ≈ .40–.70).

I like the nuances section as a framework for thinking about where to intervene to be happier: feeling misunderstood, unexcited, indecisive, envious, bored, used, unable, and unrewarded all correlate with unhappiness. Literally going through the list and seeing which areas of my life these descriptors apply to has been a good spur for me to reflect on how to change those dynamics (e.g. refocus, learn, or outsource specific tasks).

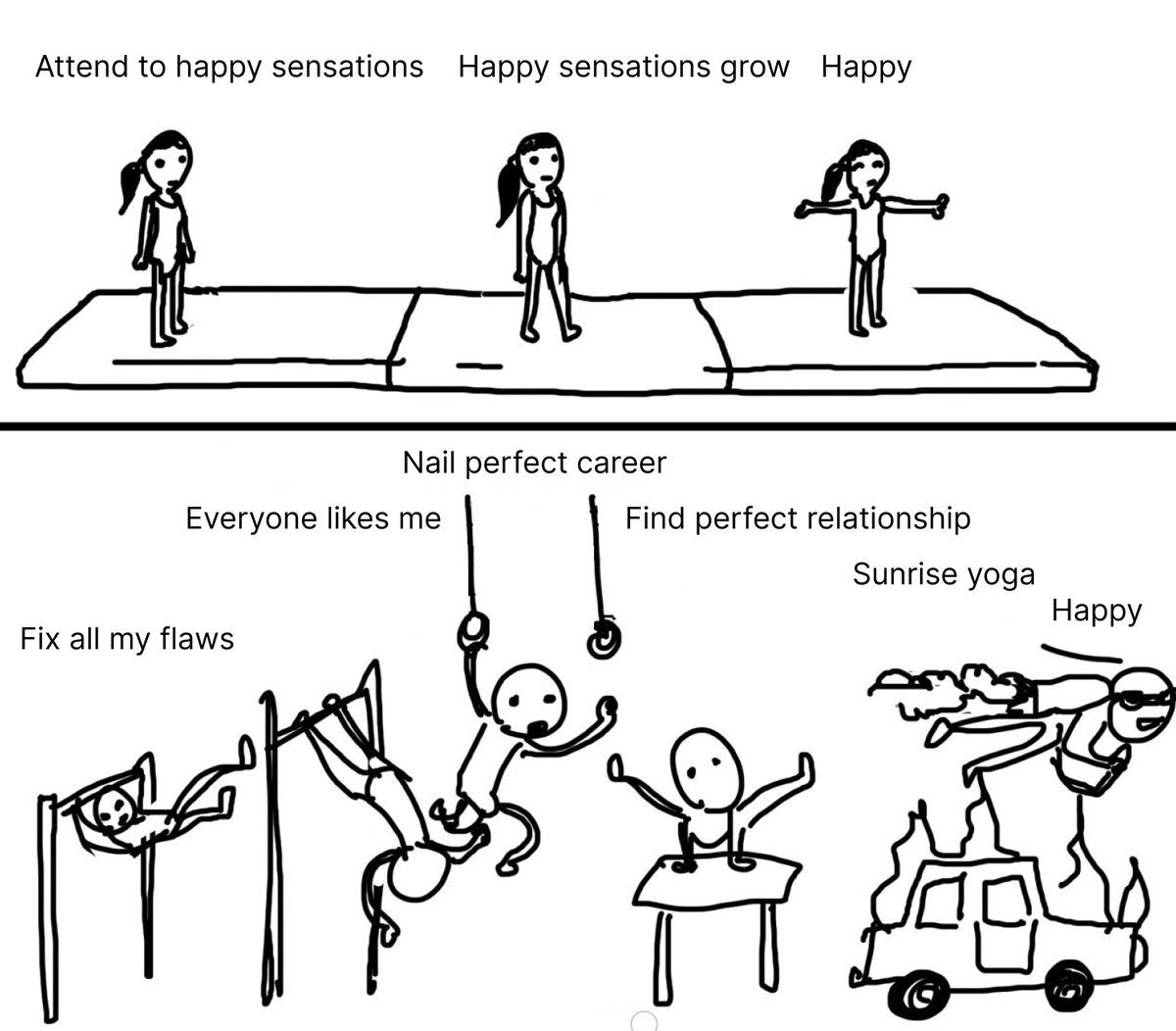

I’m of two minds when it comes to this type of personal development. On the one hand, there’s the approach where you rationally look at your life, break it into the different areas where you’re happy and unhappy, take actions to improve them, and then notice that your life is better and feel happier. Using the study above as a guide embodies this approach, and it’s good!

On the other hand, there’s the approach where you notice that happiness is already here and that being happy is just a choice:

In that spirit, I liked Nadia Asparouhova’s how-to guide for the jhanas:

To access the jhanas, you basically induce the “opposite of a panic attack,” as I’ve heard others describe it… remember, again, that the number one most important thing is to relax, have fun, and don’t overthink it.

I don’t think the jhanas made me happy, but their biggest impact was enabling me to realize how happy I already was: I just had to direct my attention towards this fact, then update how I thought of myself. Now I embrace and see the joy in life’s moments, big and small, much more easily than before.

I’m not sure if I’ve accessed a jhana state yet, but I feel confident in this advice, and that directing your attention towards happiness is a good strategy for being happier. Related: Sasha Chapin on the Spencer Greenberg podcast on raising our happiness baseline.

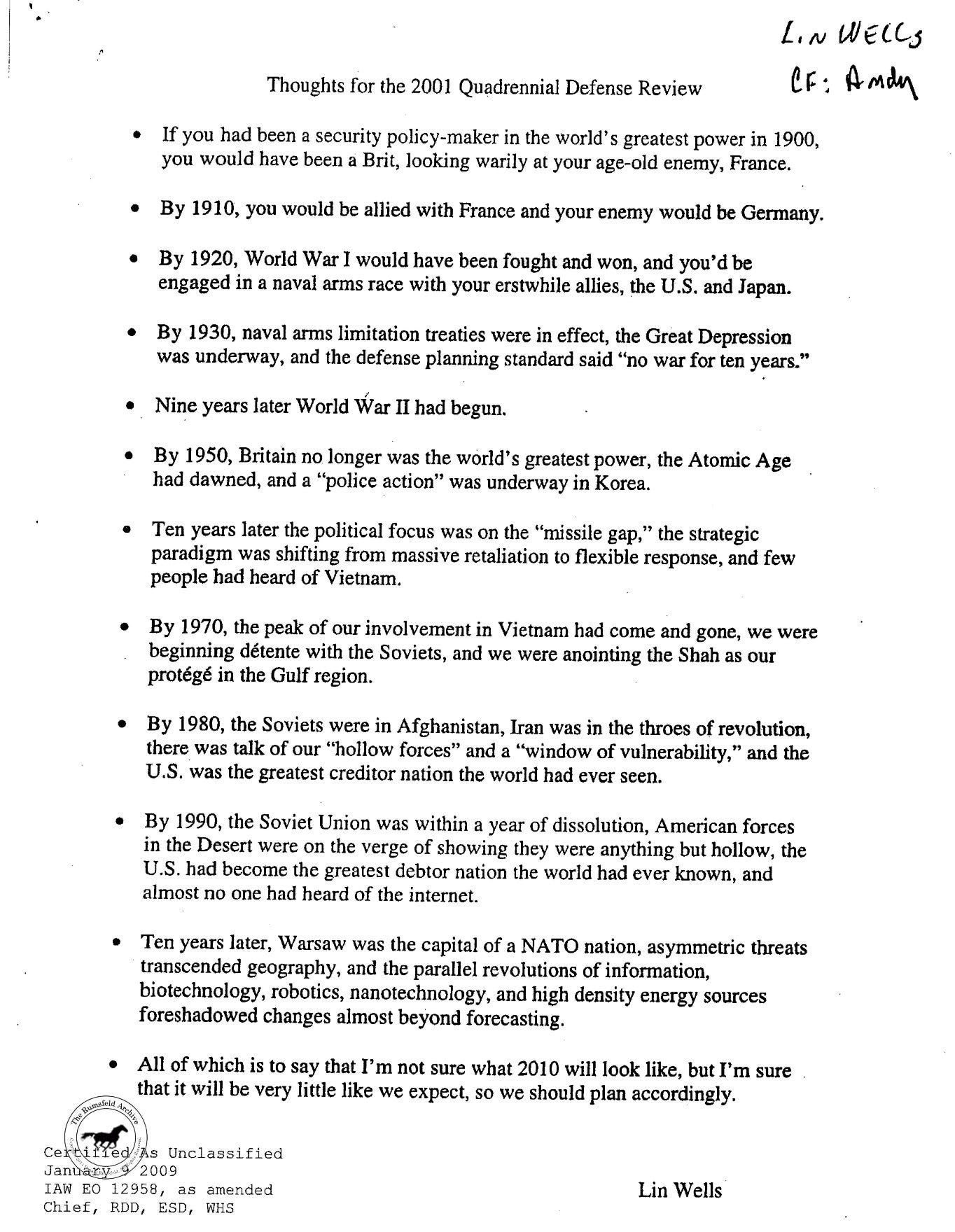

This month I read Leopold Aschenbrenner’s Situational Awareness: The Decade Ahead. Here’s my high-level summary:

It’s credible that human-level AGI is developed in the next three to four years. Continuing to scale compute will bring AI systems to the point where they can contribute to their own improvement, which will set off an ‘intelligence explosion’.

As AI becomes more powerful, decision-makers are going to wake up to AI developments and their potential impacts, and recognize that superintelligence can provide a decisive economic and military advantage.

Governments are going to get involved, with the US and China starting to race, and all of the downstream impacts that follow from that (e.g. nationalization of AI efforts).

There will be massive investments, with trillions of dollars going into compute, datacenters, and power.

AI safety, in the sense of models staying aligned with human values, is hard but doable with current approaches (‘superalignment’).

It’s important that the US and cosmopolitan values win the race to superintelligence, and we should start “The Project” of US-controlled superintelligence now.

This sparked, unsurprisingly, a lot of discussion! A few selected takes:

Leopold on the Dwarkesh Podcast:

The government project will look like a joint venture between cloud providers, labs, and the government. There is no world in which the government isn’t involved in this crazy period. At the very least, intelligence agencies need to run security for these labs. They’re already controlling access to everything.

Peter McCluskey reviews Situational Awareness

Aschenbrenner has a more credible model than does Eliezer of an intelligence explosion. Don’t forget that all models are wrong, but some are useful. Look at the world through multiple models, and don’t get overconfident about your ability to pick the best model.

Again, an economic growth explosion would be *cool*, that's my ideal outcome too, but I expect that it's less something that just pops out from auto-ML and more about developing good datasets and experimental feedback loops.

Rapid action is called for, but it needs to be based on the realities of our situation, rather than trying to force AGI into the old playbook of far less dangerous technologies. The fact that we can build something doesn't mean that we ought to, nor does it mean that the international order is helpless to intervene.

I’ve been mulling over what I think about the essay. A few thoughts:

I agree we might see superintelligent systems this decade.

Powerful AI systems will indeed confer significant strategic advantages. It’s not clear to me that they will be immediately decisive. In a gradual "intelligence explosion" scenario, I’d expect dangerous periods where one country is clearly ahead but not yet decisively, incentivizing preemptive actions by competitors.

People are waking up to this reality, but it hasn’t happened collectively yet; there will be a March 2020 moment for AI. I think many people and companies are underestimating how chaotic this will be, and how society will respond by looking for groups to blame.

I would be more comfortable with the strong competition frame if I were confident that the superalignment approach Leopold endorses will work. Given that I’m not, I’d prefer that companies and countries work together, in particular on joint safety efforts.

The world stumbled its way through a period of extreme nuclear risk, and instead of racing, I continue to endorse collectively working together to tiptoe through the intelligence explosion. I expect efforts to promote this type of cooperation, even in narrow areas, to be really important.

Importantly, we should consciously embrace the degree to which this is *not* how things will go.

Progress in automated software engineering: SWE-bench is a benchmark testing AI systems’ ability to contribute autonomously to software projects.

Come Together: Bridge RNAs Close the Gap to Genome Design: Seems like designer genomes are getting closer and closer to reality.

“The Xerox Alto was the first computer ever sold with a mouse and a graphical user interface (GUI),” said Hsu. “It was invented just off the street from where Arc [Institute] is today at Xerox PARC. It gave humans for the first time a simple and intuitive way to interact with information. Guide RNAs act like that mouse cursor to interact with nucleic acids in a large genome. What we’ve been doing so far is basically punching individual nucleotides and changing them, like punch-card programming. We want something that can operate at a much higher level of abstraction to design genomes. That’s where all of this is going.”

Surely you can be serious: Take the results of studies seriously and think about their implications:

I once saw someone give a talk about a tiny intervention that caused a gigantic effect, something like, “We gave high school seniors a hearty slap on the back and then they scored 500 points higher on the SAT.”

Everyone in the audience was like, “Hmm, interesting, I wonder if there were any gender effects, etc.”

I wanted to get up and yell: “EITHER THIS IS THE MOST POTENT PSYCHOLOGICAL INTERVENTION EVER, OR THIS STUDY IS TOTAL BULLSHIT.”

I occasionally look at bar charts like this to remind myself how much of a bubble the Bay Area is, surrounded as I am by CS graduates and vegans.

On the content front, I strongly recommend watching the new Game of Thrones series, House of the Dragon. Season two has been great so far, and is living up to the best seasons of the original GoT.

Ben