Automating 5 Years of Link Extraction with Claude Code

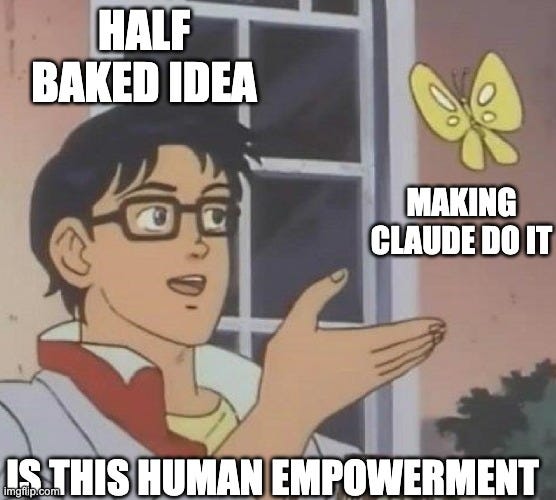

don't let your one-off text analysis ideas just be dreams

I’ve collected a lot of links on this blog. For the past five years - up until last week - I’ve almost exclusively been doing monthly roundups of links to things I’ve read or enjoyed, which I’d share along with my inspired commentary.

But outside of my blog, they’re not organized anywhere, and it would be great to have them in a single place that I could search or randomly surf to jog my memory of what I was thinking about in October 2022 (apparently Lasik!).

So I wanted to extract all the links from my blog and create Obsidian notes for each of them. I didn’t quite know what I wanted when I started, but I do have a handy intern, Claude Code (CC), who loves to agree with me that my ideas are good and turn those ideas into Python scripts. My initial prompt to CC was quite broad:

I have a substack where I’ve shared what I’ve been reading and thinking about, monthly, for about five years. I’d like to export this, and then extract all the relevant links from the page. A page tends to be structured as a series of paragraphs with the title of the link, the link, and a few sentences of commentary. I’ve exported all the posts, which are in .html format and added them to a google drive folder. Here is an example html post in the drive. Can you please come up with a plan for returning a set of markdown notes, suitable for obsidian, that contain the link, the title, the title + link to the bengoldhaber.substack.com post it came from, and the relevant commentary. Please consider a few different methods to do this, and provide suggestions to me. We’ll then pick one and do it.

As a general rule, it’s good to include examples of the inputs or outputs you want.

CC presented three different options: use Beautiful Soup to process the HTML, use API calls to Claude to process the text entirely, or combine the two into a hybrid approach where Beautiful Soup would extract the links and Claude would create the output. I chose the hybrid approach. It’s probably overkill to use an LLM to check every link, but I figured it might make the notes more joyful to read, and it would be at most $5 worth of API calls.
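To make the extraction half of the hybrid concrete, here is a minimal sketch of pulling the title, link, and surrounding commentary out of each paragraph. This is not the script CC wrote. It swaps in Python's standard-library `html.parser` for Beautiful Soup so it runs with no installs, and the names `LinkParagraphParser` and `extract_links` are made up for illustration. It also assumes each link lives inside a `<p>` tag, which may not hold for every post.

```python
from html.parser import HTMLParser


class LinkParagraphParser(HTMLParser):
    """Collect a record for every link found inside a <p> element."""

    def __init__(self):
        super().__init__()
        self.records = []
        self._in_p = False
        self._in_a = False
        self._text = []   # all text seen in the current paragraph
        self._links = []  # [url, [anchor text parts]] for the current paragraph

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self._in_p, self._text, self._links = True, [], []
        elif tag == "a" and self._in_p:
            href = dict(attrs).get("href")
            if href:
                self._in_a = True
                self._links.append([href, []])

    def handle_data(self, data):
        if self._in_p:
            self._text.append(data)
            if self._in_a:
                self._links[-1][1].append(data)

    def handle_endtag(self, tag):
        if tag == "a":
            self._in_a = False
        elif tag == "p" and self._in_p:
            # Collapse runs of whitespace so the commentary reads cleanly.
            commentary = " ".join("".join(self._text).split())
            for url, parts in self._links:
                title = " ".join("".join(parts).split())
                self.records.append(
                    {"title": title, "url": url, "commentary": commentary}
                )
            self._in_p = False


def extract_links(html):
    """Return a list of {title, url, commentary} dicts for one post."""
    parser = LinkParagraphParser()
    parser.feed(html)
    return parser.records
```

Each record carries the whole paragraph as commentary, which is the raw material you would then hand to Claude to tidy up.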

I switched it into planning mode (shift-tab in Claude Code, which lets you review and approve a plan before execution). The plan and approach it chose were good: do a dry run with no API calls, create a Beautiful Soup Python script to extract the links, and then, if I approved the output, run the full version with Beautiful Soup + Claude.
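The dry-run idea is simple enough to sketch. The version below is an illustration under my own assumptions, not CC's actual code: `build_notes` and its `annotate` parameter are hypothetical names, and `annotate` stands in for whatever function would make the paid API call.

```python
def build_notes(records, annotate=None, dry_run=True):
    """Build note dicts, calling the paid annotate step only on a real run."""
    notes = []
    for rec in records:
        note = dict(rec)  # copy so the extracted record is left untouched
        if dry_run or annotate is None:
            note["summary"] = "(dry run: no API call made)"
        else:
            note["summary"] = annotate(rec)
        notes.append(note)
    return notes
```

Defaulting `dry_run` to `True` means you have to opt in to spending money, which is the safer way around.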

I had it auto-run and create the code, since a.) it’s a low-cost, low-risk operation and b.) I wanted to check Twitter.

The only times I needed to get involved:

Asking it to output a test file so that I could review the results.

Providing a CSV of post ids to build the canonical URL to link the notes to the correct bengoldhaber.com blog post.

Requesting a batch processing checkpoint in case it times out.

Adding an API key.

Changing the model for the API processing to claude-haiku-4-5, which is faster and cheaper.
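Of the interventions above, the batch checkpoint is the one most worth seeing in code. Here is a rough sketch, assuming each item is identified by a string (like a URL) and the result for each item can be saved as JSON. The function name, the `handle` callback, and the batch size are all hypothetical, and the real script may have done this differently.

```python
import json
from pathlib import Path


def process_in_batches(items, handle, checkpoint_path, batch_size=25):
    """Process string items in batches, saving results after each batch.

    If the run dies partway through, calling this again with the same
    checkpoint file skips everything that was already saved.
    """
    path = Path(checkpoint_path)
    done = json.loads(path.read_text()) if path.exists() else {}
    pending = [item for item in items if item not in done]
    for start in range(0, len(pending), batch_size):
        for item in pending[start:start + batch_size]:
            done[item] = handle(item)  # e.g. the API call for one link
        path.write_text(json.dumps(done))  # progress survives a crash
    return done
```

The tradeoff is that a crash mid-batch loses at most one batch of work, so a smaller `batch_size` means less rework at the cost of more file writes.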

While some of that sounds quite technical, I’m not sure it’s necessary to know what those mean in order to execute this type of project - I think that CC would have suggested these ideas if it had run into trouble.

After about 40 minutes and 57 cents (my $5 estimate was off by an OOM!) I received 1,133 links as a set of markdown files suitable for Obsidian.
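For a sense of what "markdown files suitable for Obsidian" can mean, here is a small sketch that turns one extracted link into a note. The layout is my own guess at something reasonable, not the format CC actually produced, and `slugify` and `render_note` are illustrative names.

```python
import re


def slugify(title, max_len=60):
    """Turn a link title into a safe, lowercase file name stem."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    return slug[:max_len].rstrip("-") or "untitled"


def render_note(title, url, commentary, post_title, post_url):
    """Return the markdown body for one link note."""
    lines = [
        f"# {title}",
        "",
        f"Link: [{title}]({url})",
        f"From: [{post_title}]({post_url})",
        "",
        commentary,
        "",
    ]
    return "\n".join(lines)
```

Writing each note to `slugify(title) + ".md"` inside your vault folder is enough for Obsidian to pick it up, since a vault is just a folder of markdown files.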

There are a lot of other things I could do here, like have Claude automatically add tags or have it do some exploratory data processing to see if there are trends. Start to finish, this took me about an hour, with a lot of that time spent, again, surfing Twitter.

It’s good to have in your repertoire of moves the pattern of 1.) notice a small task 2.) have Claude Code whip something up to do it 3.) check Twitter.